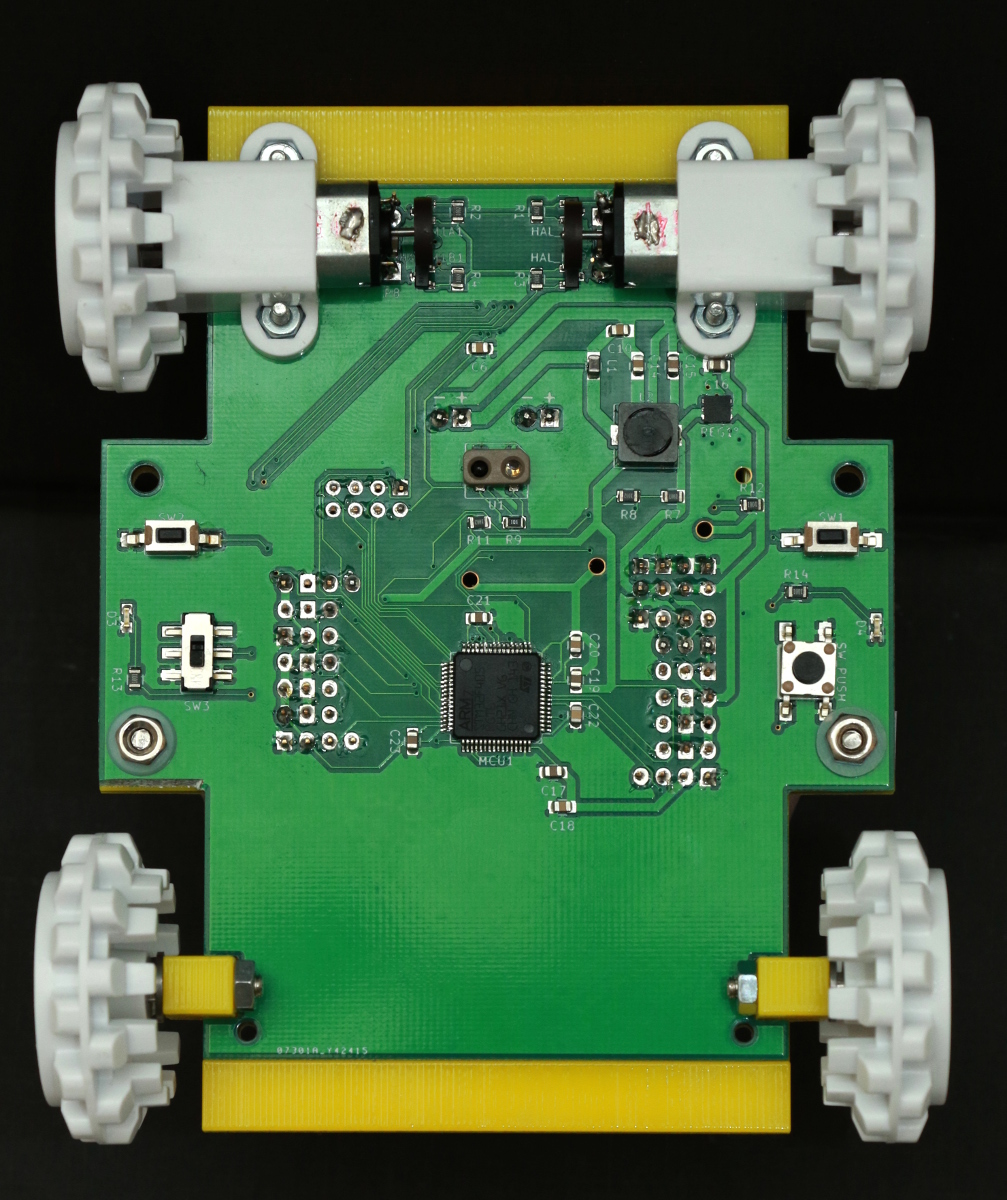

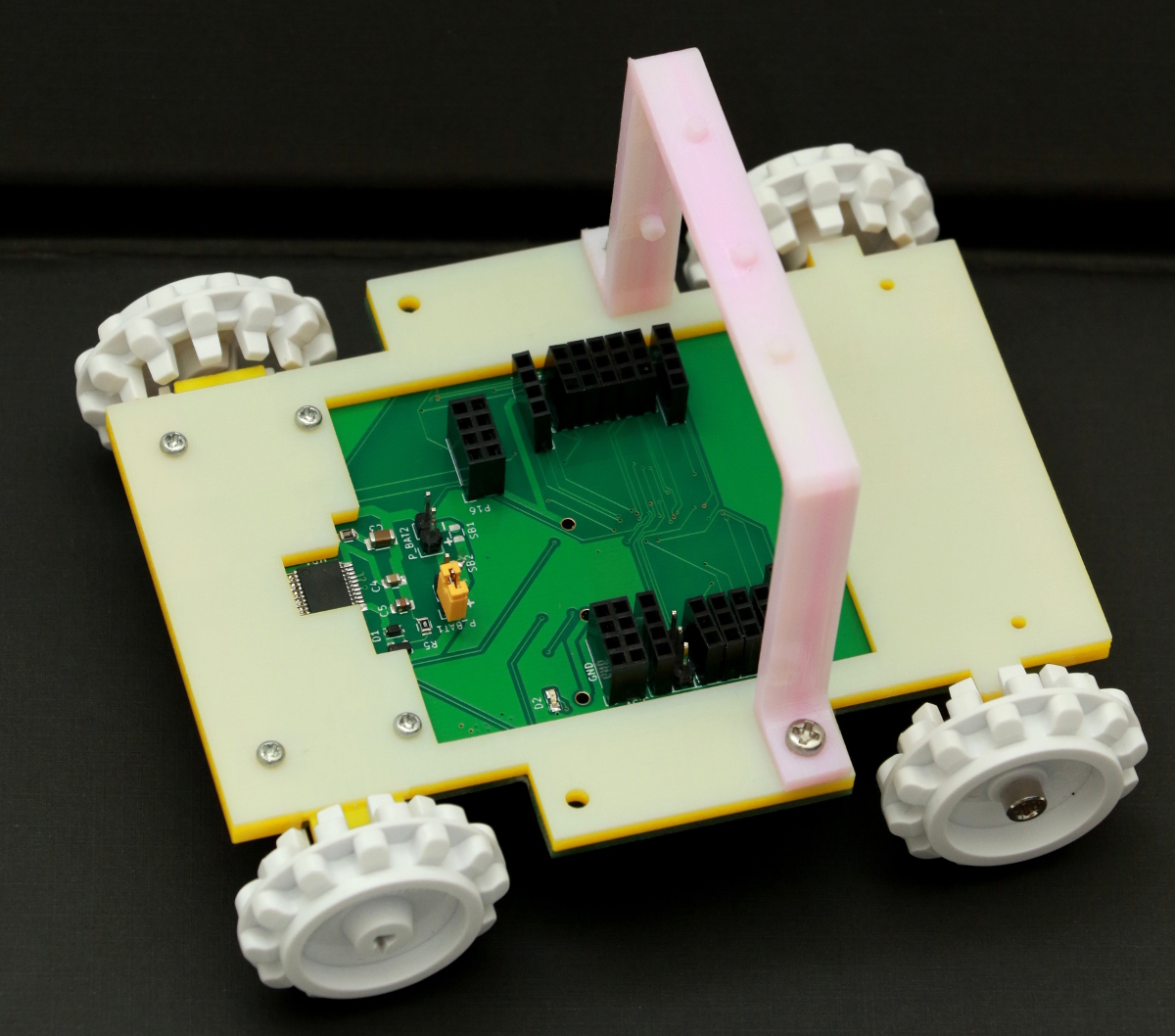

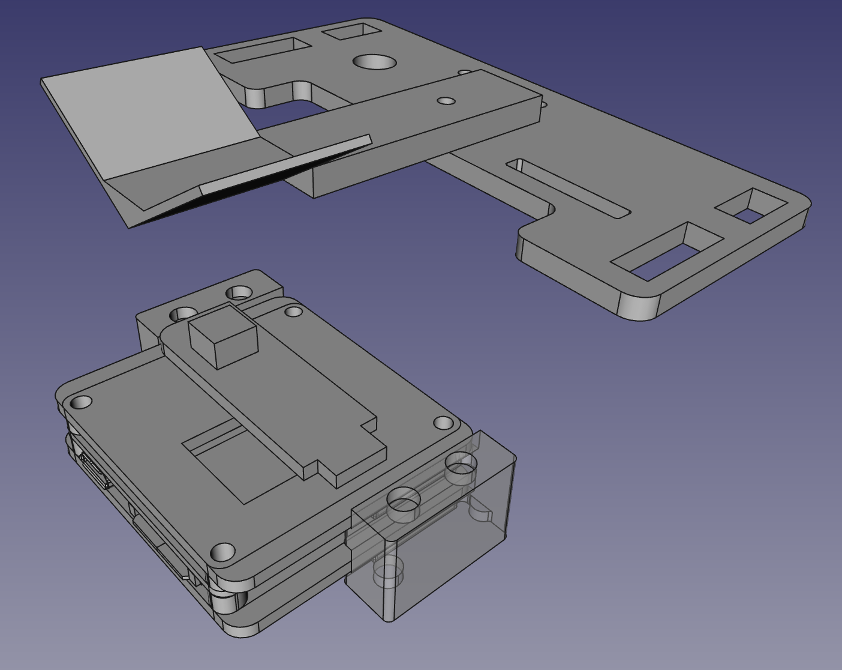

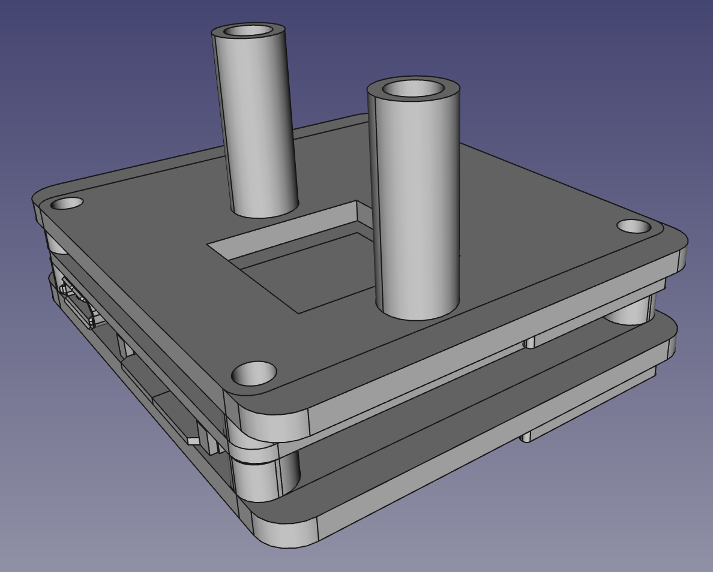

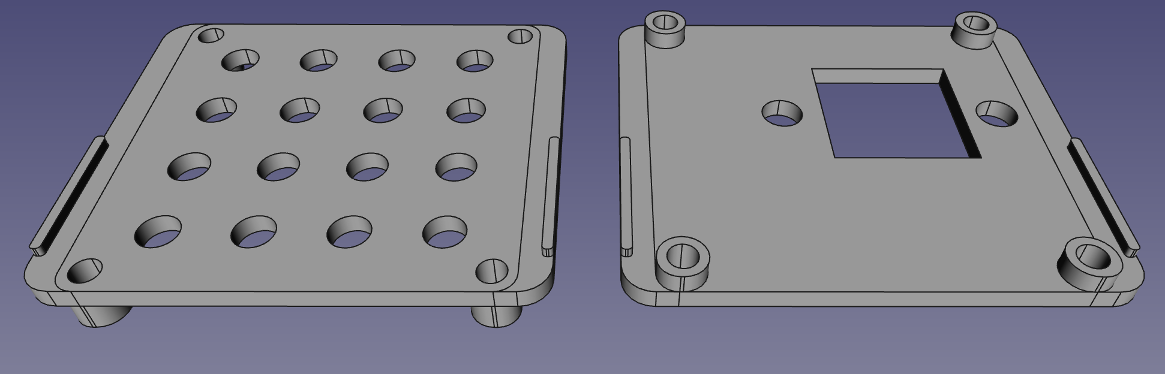

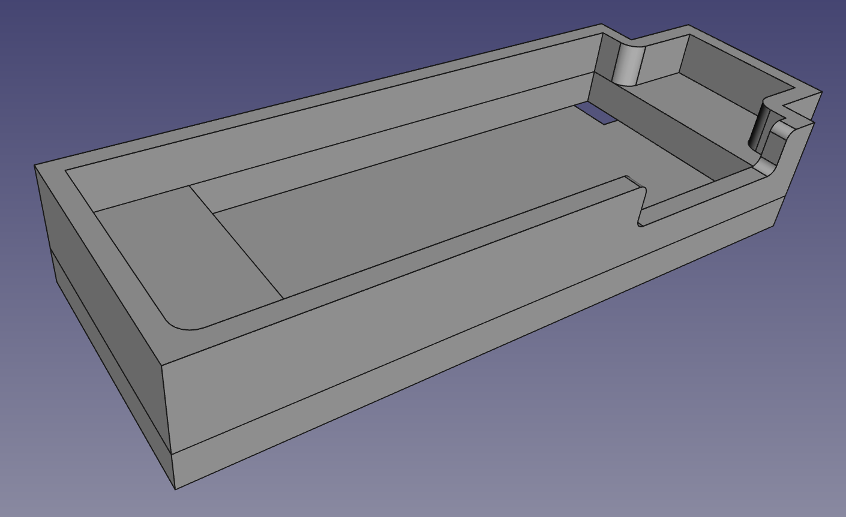

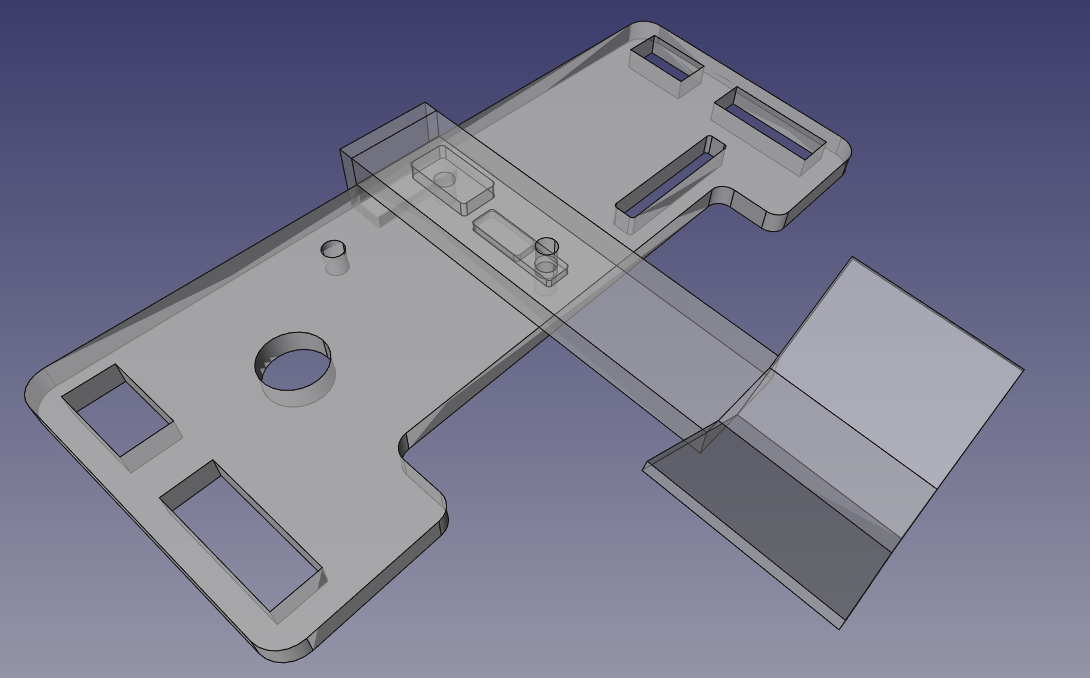

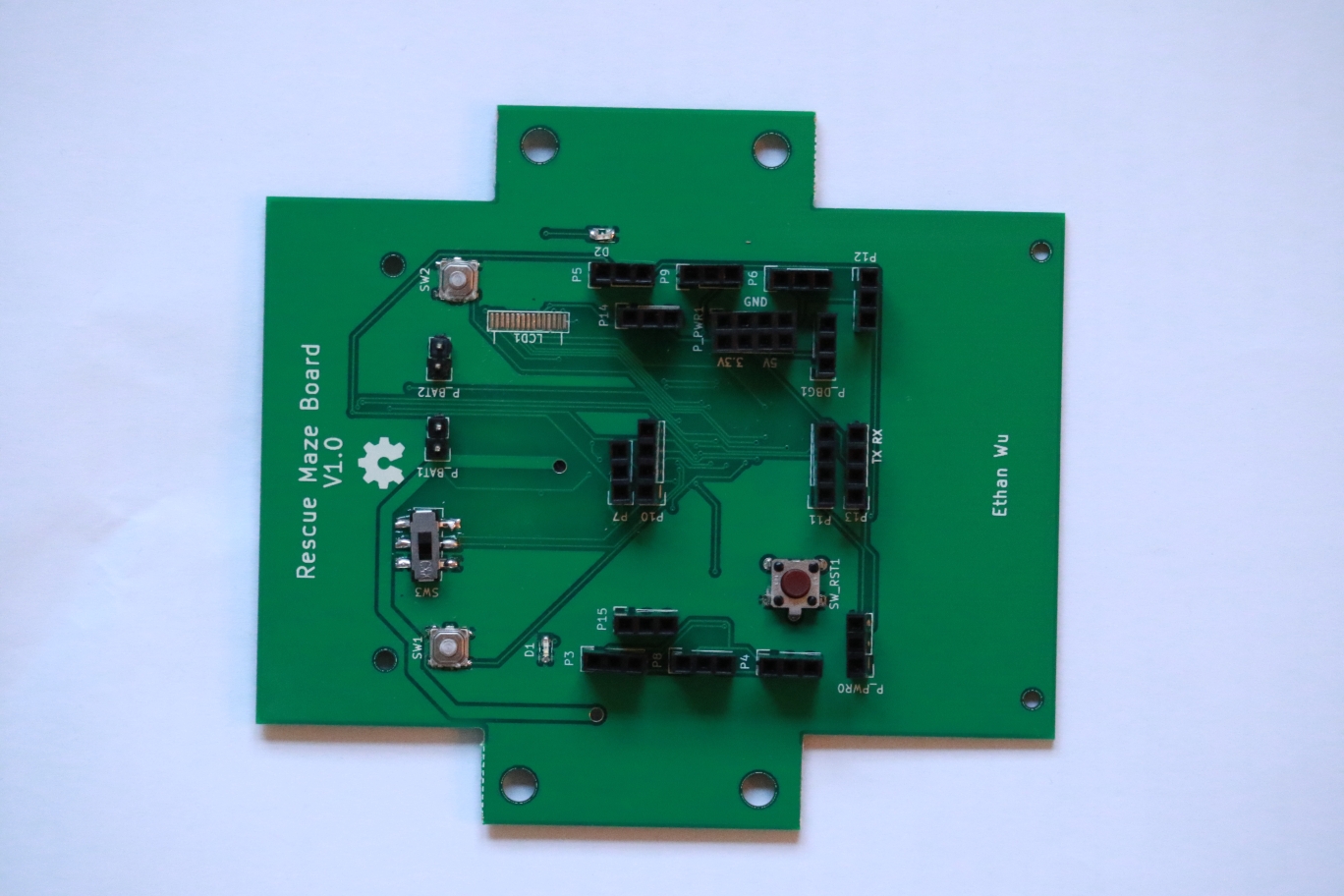

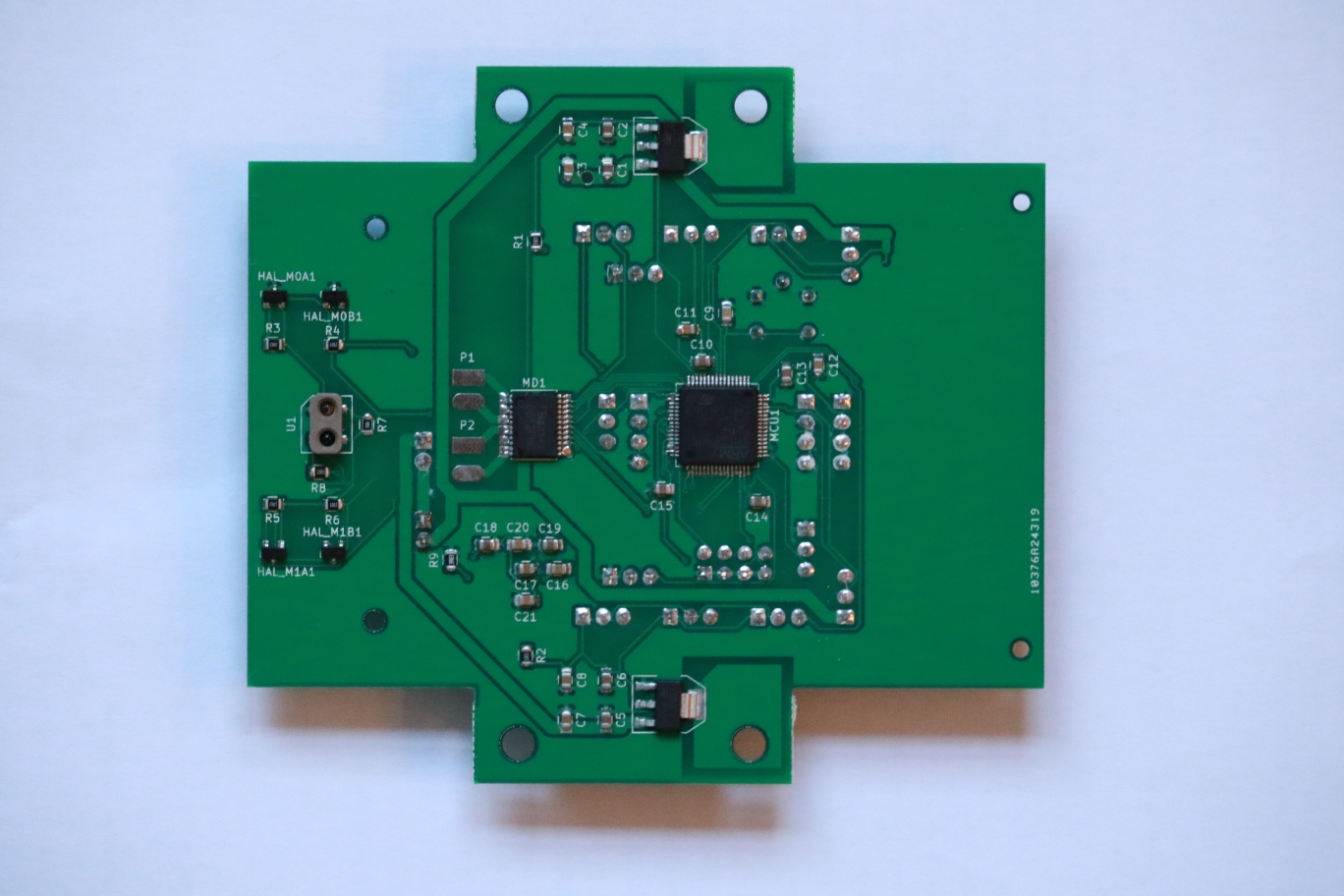

Nov. 23 - 29, 2015: Circuit board prototype (V1.0) assembly

Goal

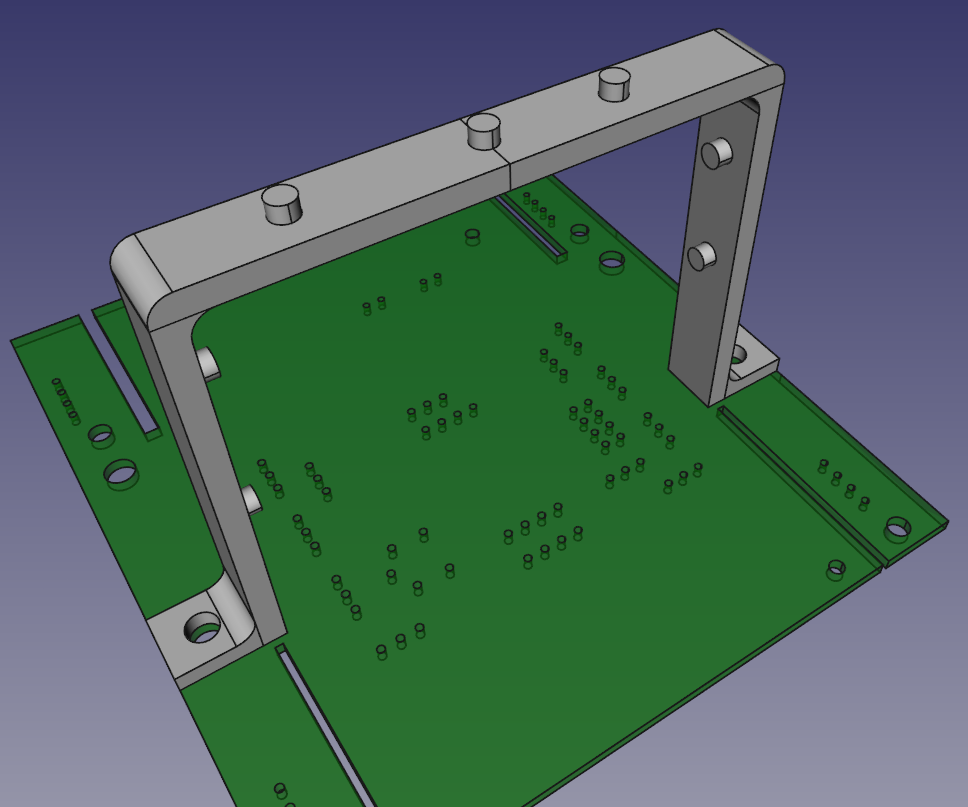

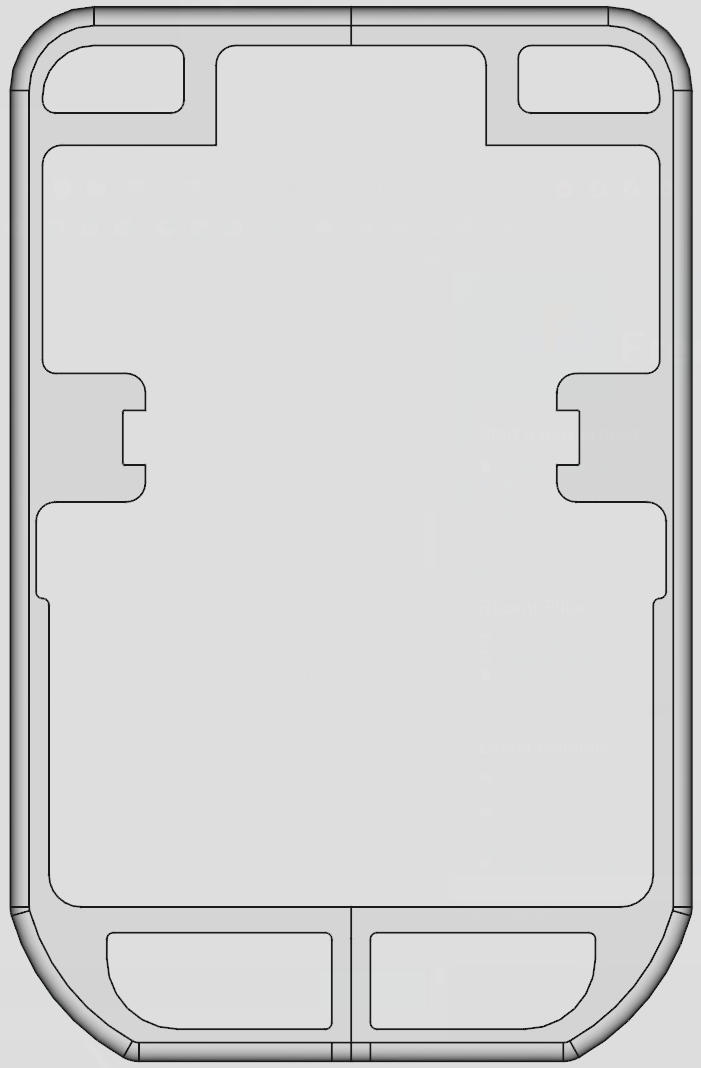

Assemble PCB

- Apply solder paste and place components (about 1 hr)

Reflow board in toaster oven

- Solder paste melted at ~190°C; removed the board from the oven at ~210°C

- Data-logged thermal profile:

- Cleaned up excess solder bridges on the MCU and motor driver
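As a rough illustration of how a data-logged reflow profile like the one above could be summarized, here is a minimal Python sketch. It assumes the log is a list of (seconds, °C) samples; the function names and the sample values are hypothetical and are not the actual logged data. Only the ~190°C melt point and ~210°C peak come from the notes above.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (seconds since start, temperature in °C)


def peak_temperature(samples: List[Sample]) -> float:
    """Return the highest logged temperature."""
    if not samples:
        raise ValueError("no samples in profile")
    return max(temp for _, temp in samples)


def time_above(samples: List[Sample], threshold_c: float) -> float:
    """Return seconds spent at or above threshold_c.

    Uses linear interpolation between consecutive samples to estimate
    where the profile crosses the threshold.
    """
    total = 0.0
    for (t0, c0), (t1, c1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if c0 >= threshold_c and c1 >= threshold_c:
            total += dt  # whole interval is above the threshold
        elif c0 < threshold_c <= c1:
            # rising through the threshold: count the upper part only
            total += dt * (c1 - threshold_c) / (c1 - c0)
        elif c1 < threshold_c <= c0:
            # falling through the threshold: count the part before crossing
            total += dt * (c0 - threshold_c) / (c0 - c1)
    return total


# Made-up example samples (NOT the real log), shaped to melt near 190°C
# and peak near 210°C as described in the notes.
example_profile: List[Sample] = [
    (0, 25), (60, 150), (120, 180), (150, 200), (170, 210), (200, 170),
]

peak = peak_temperature(example_profile)
seconds_molten = time_above(example_profile, threshold_c=190.0)
print(f"peak: {peak} °C, time above 190 °C: {seconds_molten} s")
```

Time spent above the melt point is a common figure to check against the solder paste datasheet, since too short a time can leave cold joints and too long a time can stress components.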

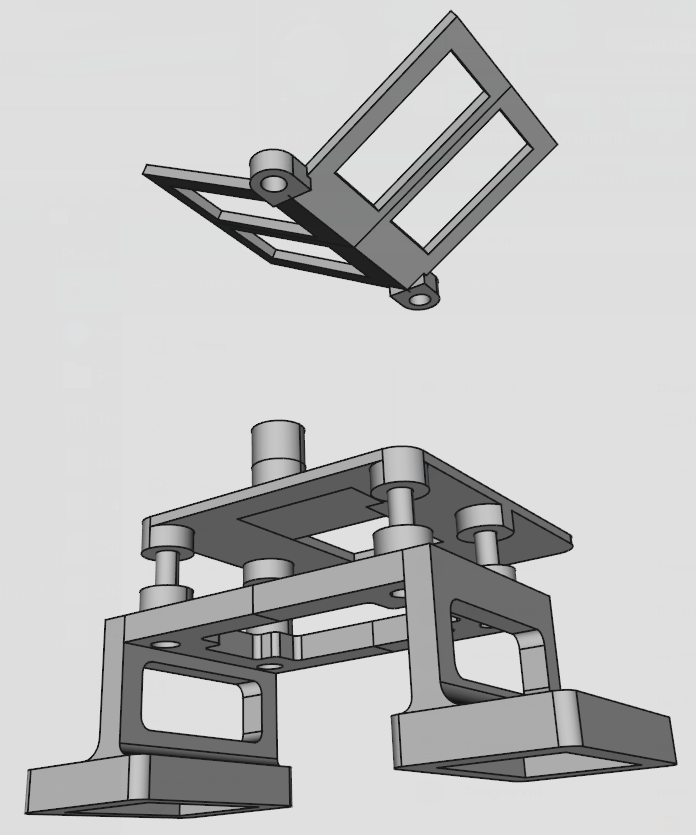

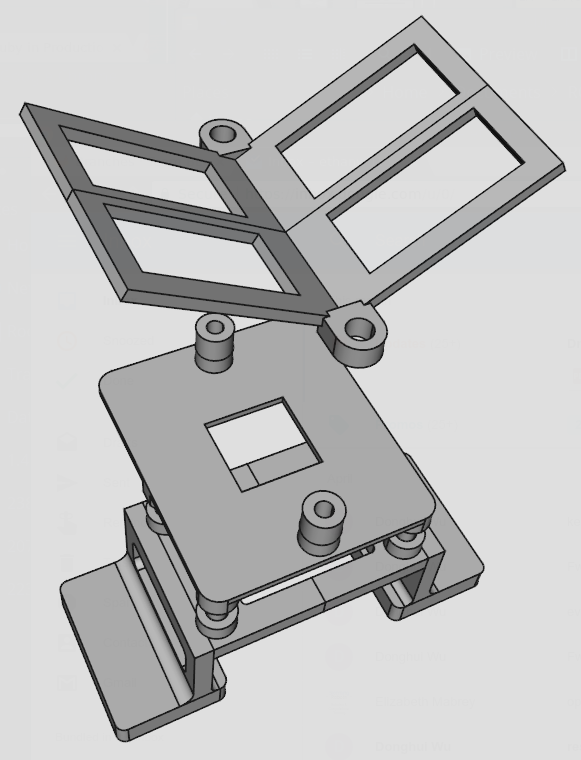

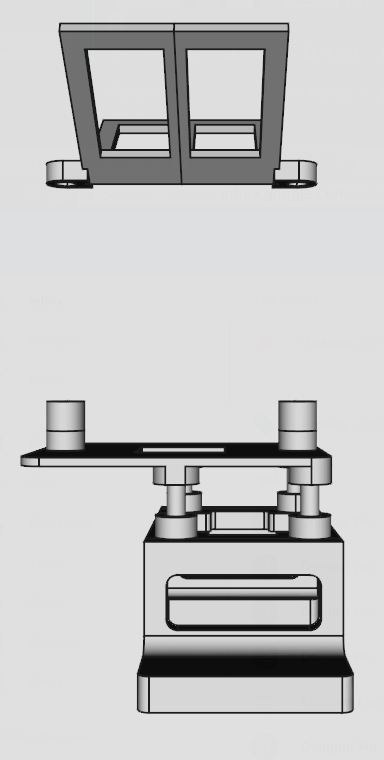

- Soldered pin headers, motors, and buttons by hand

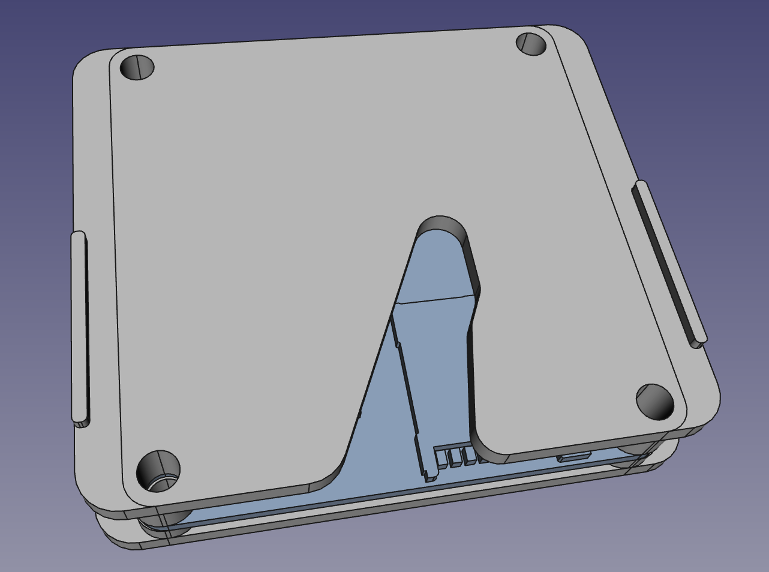

Assembling circuit boards on Thanksgiving

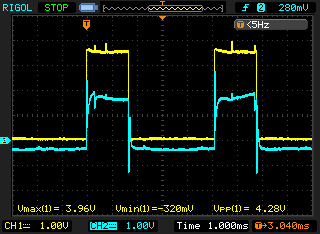

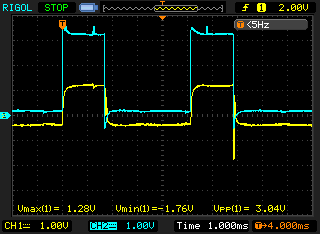

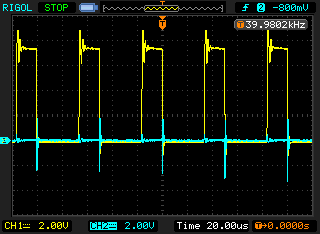

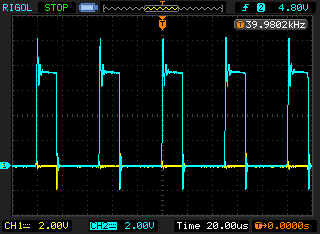

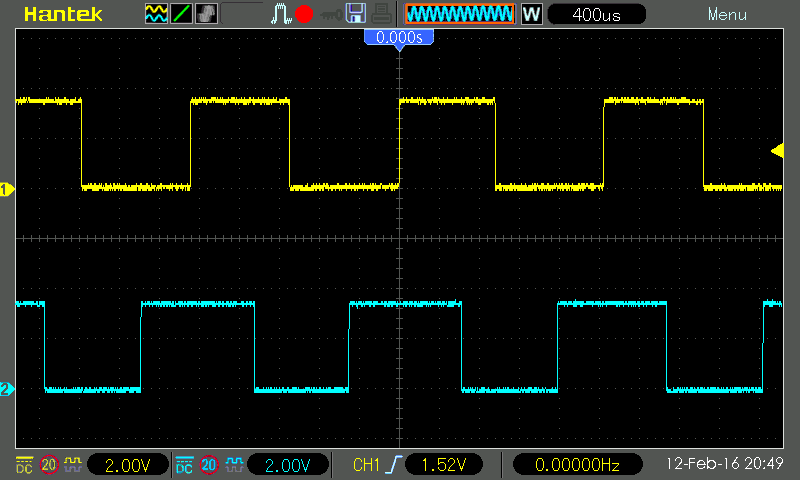

Testing PCB

Powered the board from a constant-voltage/constant-current bench supply at 6V

- Able to program MCU

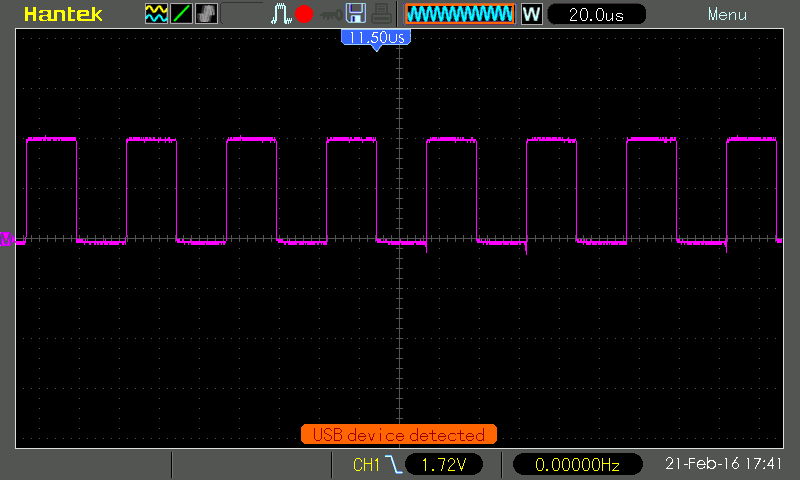

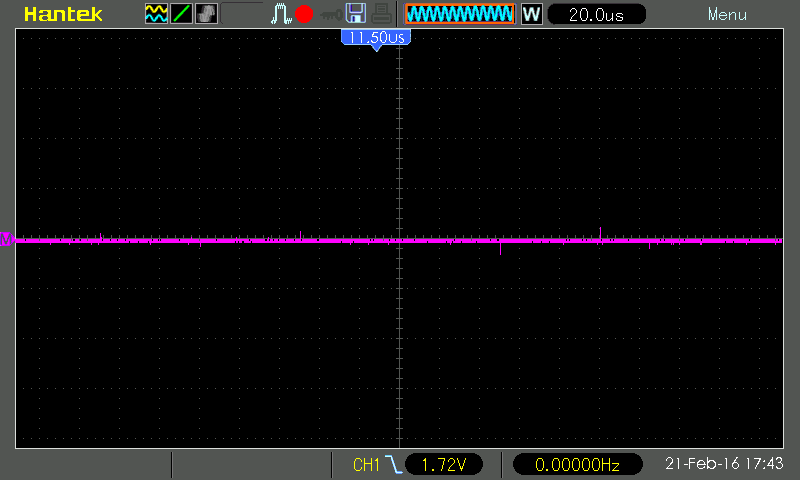

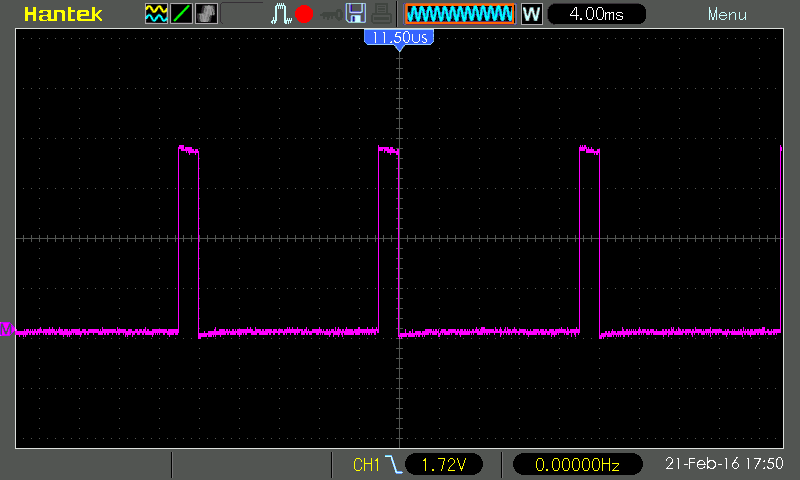

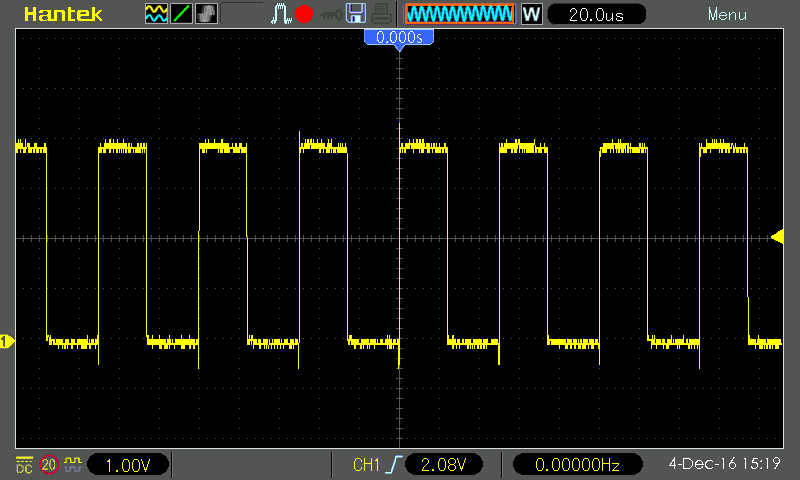

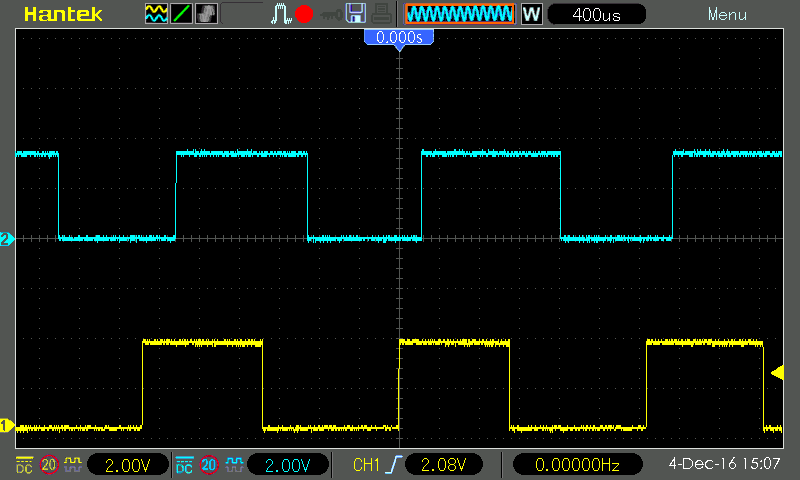

- MCU peripherals (internal clock, timers, ADCs) work

- Motors spin (at low speed)

- Temperatures on MCU and motor driver are low (<30°C)

No issues so far, so increased the supply to 7V

- Still no problems; everything seems to be working!